Do you know what makes me really excited about LightGBM?

It's the secret weapon data scientists use in Kaggle competitions...

...and not only in Kaggle competitions...

...organizations rely on it too, especially when handling massive datasets!

Yes, LightGBM is a super powerful machine learning algorithm!

If you're aspiring to break into data science (which I assume you are)...

...mastering algorithms like LightGBM is a must.

Let me walk you through this - I promise you'll get it!

Key Takeaways

Before diving in, here are the highlights:

- Speed: LightGBM trains fast thanks to histogram-based split finding and its leaf-wise growth strategy

- Ideal for Large Datasets: LightGBM is built to handle huge datasets efficiently

- LightGBM vs XGBoost: LightGBM can train many times faster than similar tools; speedups of up to 20x have been reported, though the gain depends heavily on your data and settings

- Leaf-Wise Tree Growth: LightGBM grows trees leaf by leaf, unlike implementations that grow them level by level

- LightGBM Hyperparameters: You can fine-tune it using key settings like num_leaves and learning_rate

- Efficient: While it's similar to XGBoost, it handles big data differently and often more efficiently

What is LightGBM?

Let me break this down for you.

LightGBM (Light Gradient Boosting Machine) is an open-source framework for building predictive models with gradient boosting.

You know what it reminds me of?

Picture an escape room with me: one clue leads to another, and each step builds on the last.

That's exactly how gradient boosting works: it builds decision trees one after another, and each new tree is trained to fix the errors the previous ones left behind.
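
To make the escape-room picture concrete, here is a minimal from-scratch sketch of gradient boosting for regression in plain Python. It is not LightGBM's implementation: it assumes squared error as the loss, uses one-split "stumps" instead of full trees, and the function names (fit_stump, gradient_boost, mse) are hypothetical.

```python
# A from-scratch sketch of gradient boosting for regression (squared error).
# Simplification: each "tree" is a one-split stump, not a full LightGBM tree.

def fit_stump(xs, residuals):
    """Return a one-split predictor that best fits the residuals (least squares)."""
    best = None
    for threshold in sorted(set(xs))[:-1]:  # every split leaving both sides non-empty
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean, right_mean = sum(left) / len(left), sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    _, threshold, left_value, right_value = best
    return lambda x: left_value if x <= threshold else right_value


def gradient_boost(xs, ys, n_rounds=20, learning_rate=0.3):
    """Fit stumps one after another, each on the errors the previous ones left."""
    base = sum(ys) / len(ys)  # round 0: predict the mean
    predictions = [base] * len(ys)
    stumps = []
    for _ in range(n_rounds):
        # For squared error, the negative gradient is simply the residual.
        residuals = [y - p for y, p in zip(ys, predictions)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        predictions = [p + learning_rate * stump(x) for p, x in zip(predictions, xs)]
    return lambda x: base + sum(learning_rate * s(x) for s in stumps)


def mse(model, xs, ys):
    """Mean squared error of a model on the given data."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(ys)


xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.0, 1.2, 0.9, 3.1, 3.0, 2.8, 5.2, 5.1]
for rounds in (1, 5, 20):
    print(rounds, round(mse(gradient_boost(xs, ys, n_rounds=rounds), xs, ys), 4))
```

The training error shrinks as rounds are added. LightGBM follows the same recipe, but each round fits a full tree to the gradients (and hessians) of whichever loss you choose.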

What makes LightGBM special?

Want to know what makes LightGBM truly unique?

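Instead of growing trees level by level (like layers in a cake), it grows them leaf by leaf, always splitting the leaf that reduces the error the most. For the same number of leaves, this usually reduces the error more than level-wise growth, which is a big part of why LightGBM is so fast and accurate on big datasets.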

Now that you've got the basics down, ready to explore what makes this tool so special?

Advantages of LightGBM

Remember how I mentioned LightGBM's unique way of growing trees?

This clever approach gives it some serious advantages that'll make your life easier:

- Speed Demon: It blazes through large datasets faster than you can say "gradient boosting"

- Accuracy Master: It's not just quick: it often matches or beats other algorithms on accuracy while maintaining that speed

- Industry Favorite: Ever heard of Microsoft? Yeah, they created it! They use it, Tencent uses it: the big players love it

Now that you've seen these impressive advantages, you might be wondering, "When exactly should I pull out this powerful tool?"

Let's explore that next...

When to Use LightGBM?

You know how every superhero has their perfect moment to shine?

Well, LightGBM has its sweet spots too.

Let me show you exactly when to pull this ace from your sleeve:

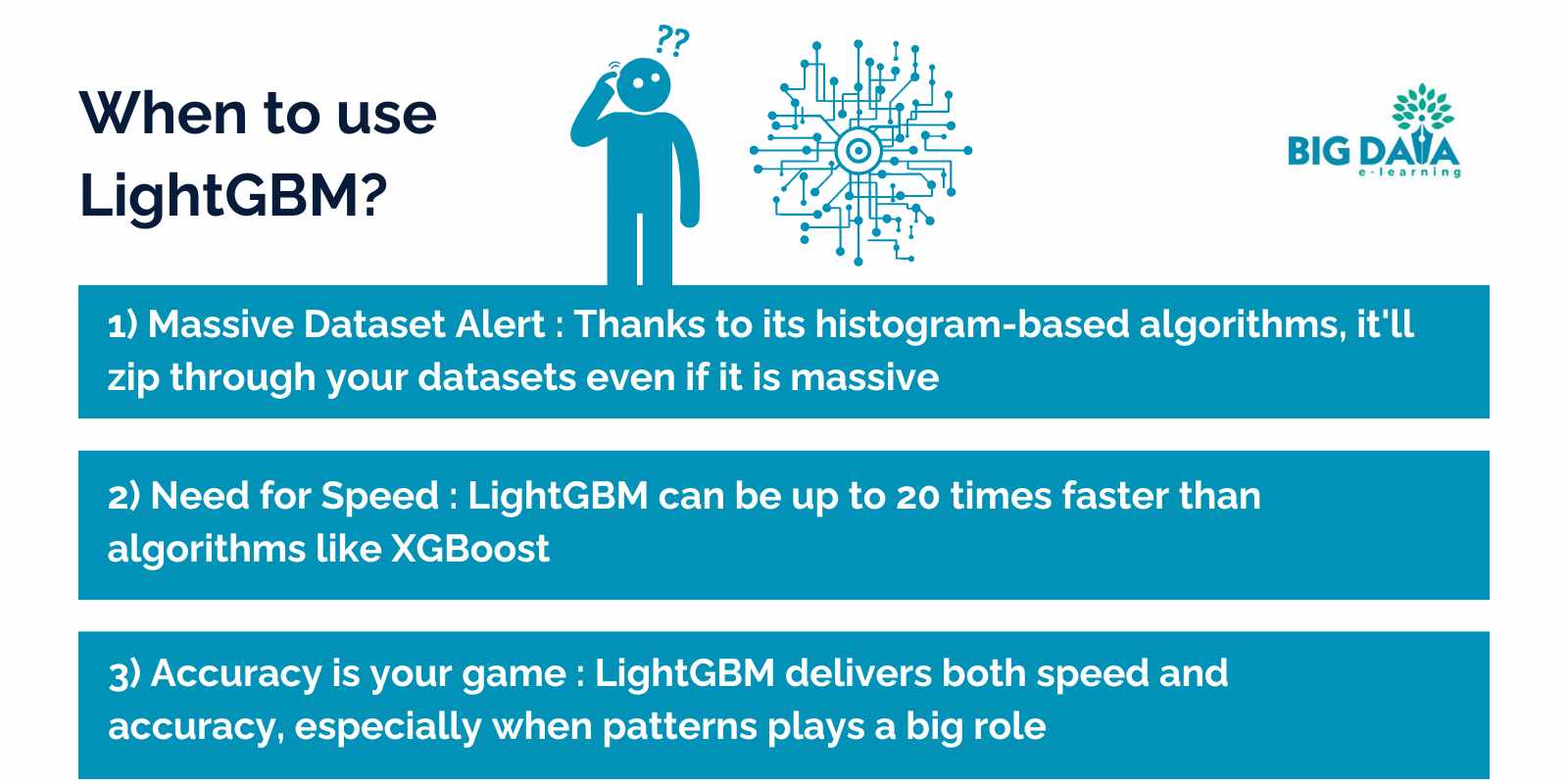

1. Massive Dataset Alert

Got millions of rows and tons of features? This is where LightGBM really struts its stuff. Thanks to its histogram-based algorithms, it'll zip through your data while other algorithms are still warming up.

2. Need for Speed

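Here's something that'll blow your mind: in some benchmarks LightGBM trains up to 20 times faster than algorithms like XGBoost, especially on big datasets (the exact gap depends on your data and settings). How? Histogram-based split finding does much of the heavy lifting, and the leaf-wise growth strategy we talked about earlier squeezes more error reduction out of every split.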

3. Accuracy is Your Game

Let's face it: in data science, being fast isn't enough. You need accuracy too. And guess what? LightGBM delivers both. It's particularly impressive with structured (tabular) data, where patterns across many columns play a big role.

4. Complex Problems

Got a dataset that looks like a maze? LightGBM thrives on complexity. It can use categorical features directly, without one-hot encoding, and its leaf-wise trees can capture intricate interactions between features. It's like having a Swiss Army knife for intricate datasets.
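
Here's a rough idea of how a categorical split can work without one-hot encoding. LightGBM's documentation describes sorting a categorical feature's histogram by its accumulated gradient statistics and then searching that sorted order for the best split. The sketch below assumes squared error, where that ordering amounts to sorting categories by their mean target; the function name best_categorical_split and the toy data are hypothetical, and the real implementation adds safeguards (such as smoothing and limits on how many categories it considers) that are left out here.

```python
from collections import defaultdict


def best_categorical_split(categories, targets):
    """Order categories by mean target, then scan that order for the best 2-way split.

    Squared-error setting: the gain is the reduction in sum of squared errors,
    S_left**2 / n_left + S_right**2 / n_right - S_total**2 / n_total.
    """
    sums, counts = defaultdict(float), defaultdict(int)
    for category, y in zip(categories, targets):
        sums[category] += y
        counts[category] += 1
    ordered = sorted(sums, key=lambda c: sums[c] / counts[c])
    total_sum, total_n = sum(sums.values()), sum(counts.values())
    best_gain, best_left = float("-inf"), None
    left_sum, left_n = 0.0, 0
    for i, category in enumerate(ordered[:-1]):  # keep at least one category on the right
        left_sum += sums[category]
        left_n += counts[category]
        right_sum, right_n = total_sum - left_sum, total_n - left_n
        gain = left_sum ** 2 / left_n + right_sum ** 2 / right_n - total_sum ** 2 / total_n
        if gain > best_gain:
            best_gain, best_left = gain, set(ordered[: i + 1])
    return best_left, best_gain


colors = ["red", "blue", "green", "red", "blue", "green", "yellow", "yellow"]
targets = [1, 10, 2, 1, 11, 3, 9, 10]
print(best_categorical_split(colors, targets))
```

The best split groups {red, green} against {yellow, blue}, something a single split on one one-hot column could not express. In the actual library you mark such columns through the categorical_feature argument of lgb.Dataset, or by giving them the pandas category dtype.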

5. Limited Resources

Here's the best part - you don't need a supercomputer to run LightGBM. It's surprisingly memory-efficient, running smoothly even on smaller systems.

Now that you know when to use it, you're probably wondering how to make it work its best.

That's where hyperparameters come in...

LightGBM Hyperparameters

Think of hyperparameters as the control panel for your LightGBM model.

Tuning them well is crucial, and the leaf-wise growth strategy makes it even more important: because trees can grow deep along a single branch, settings like num_leaves, max_depth, and min_data_in_leaf are your main defense against overfitting.

Getting these parameters right is also what lets LightGBM deliver the speed and accuracy it's known for, for example when you benchmark it against XGBoost.
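
As a reference point, here is one hypothetical starting configuration that uses the hyperparameters discussed in this section. The keys are LightGBM parameter names; the values are illustrative (several are simply LightGBM's defaults), not tuned recommendations for your data.

```python
# Hypothetical starting configuration for a binary classifier.
# Values are illustrative starting points, not tuned recommendations.
params = {
    "objective": "binary",     # loss function for the task
    "learning_rate": 0.05,     # smaller steps, so more boosting rounds
    "num_leaves": 31,          # LightGBM's default
    "max_depth": -1,           # -1 = no depth limit (LightGBM's default)
    "min_data_in_leaf": 20,    # LightGBM's default
    "feature_fraction": 0.8,   # each tree sees 80% of the features
    "verbosity": -1,           # silence training logs
}
```

With the lightgbm package installed, you would pass it to training along the lines of `lgb.train(params, train_set, num_boost_round=1000, valid_sets=[valid_set], callbacks=[lgb.early_stopping(stopping_rounds=100)])`, where train_set and valid_set are lgb.Dataset objects built from your own data.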

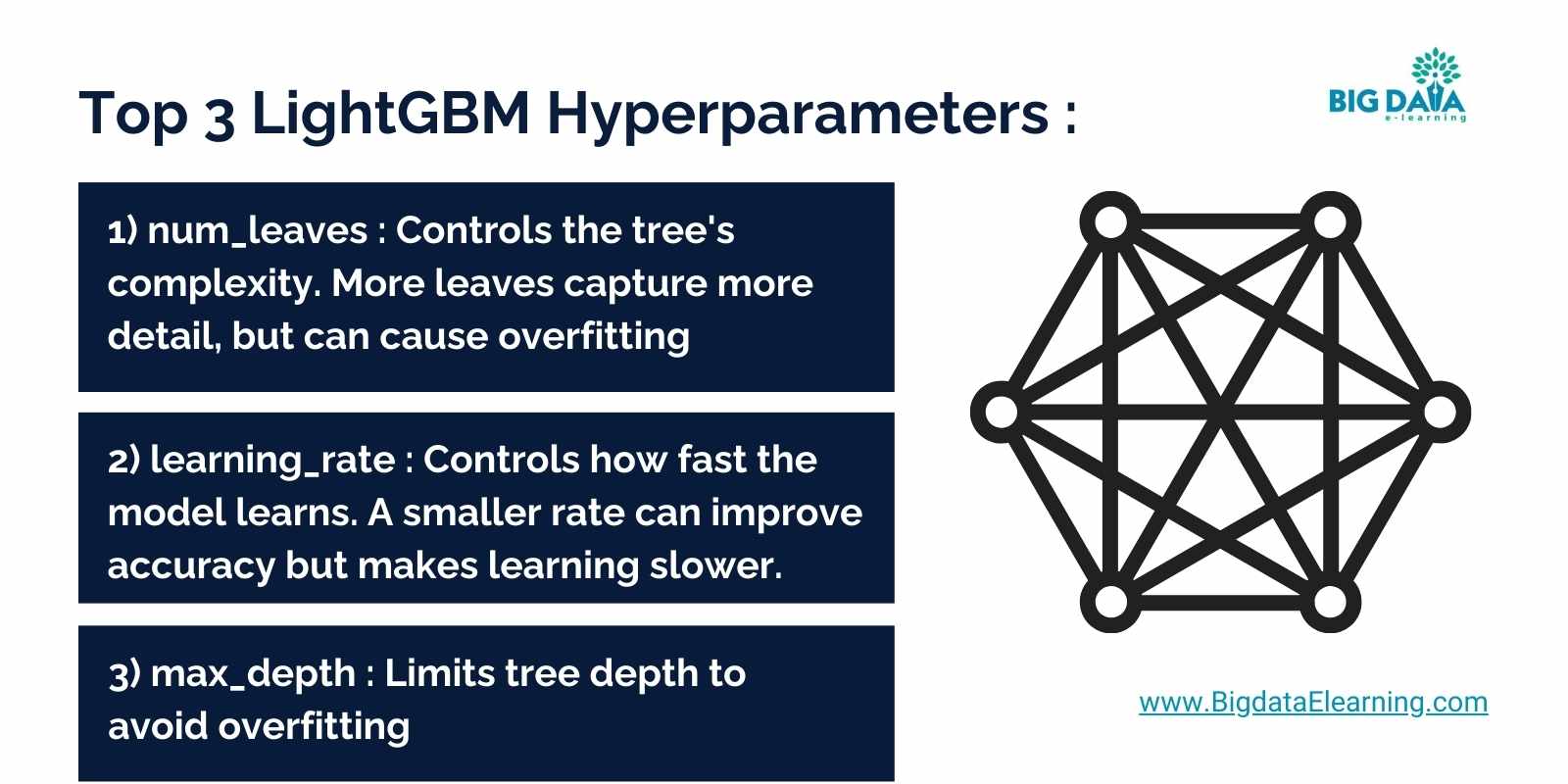

num_leaves

- Why It’s Important: Controls the tree's complexity. More leaves capture more detail, but can cause overfitting.

- Interview Tip: Keep num_leaves less than $2^{\text{max\_depth}}$ to balance complexity and generalization.
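
The arithmetic behind this tip: a binary tree of depth d has at most 2^d leaves, so with max_depth = 7 the cap is 128. If num_leaves is 128 or higher it no longer restricts anything and only the depth limit does; keeping it well below (70, for example) makes num_leaves the setting that actually controls complexity. Here's a tiny sketch of the check with hypothetical helper names; note that LightGBM treats max_depth <= 0 as "no limit".

```python
def leaf_cap(max_depth):
    """Maximum number of leaves a binary tree of this depth can have.

    LightGBM treats max_depth <= 0 as "no limit", so we return None then.
    """
    return None if max_depth <= 0 else 2 ** max_depth


def num_leaves_ok(num_leaves, max_depth):
    """Rule of thumb from the tip: keep num_leaves below 2 ** max_depth."""
    cap = leaf_cap(max_depth)
    return cap is None or num_leaves < cap


for leaves in (31, 70, 128, 200):
    print(leaves, num_leaves_ok(leaves, max_depth=7))
```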

learning_rate

- Why It’s Important: Controls how fast the model learns. A smaller rate can improve accuracy but makes learning slower.

- Interview Tip: Pair a low learning rate (like 0.01) with many boosting rounds (1000+).
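
Why does a small learning rate need so many rounds? Here's an idealized back-of-the-envelope model (an assumption, not how real trees behave): with squared error, suppose every tree predicts the current residual perfectly and the learning rate scales that prediction down. Each round then removes a fixed fraction of the remaining error, so after n rounds the error is (1 - learning_rate)^n of where it started. The function name is hypothetical.

```python
def rounds_to_shrink(learning_rate, tolerance=0.01):
    """Count rounds until the remaining error drops below `tolerance` of its start.

    Idealized: each tree predicts the current residual exactly, and the
    learning rate scales that prediction before it is added.
    """
    residual, rounds = 1.0, 0
    while residual > tolerance:
        residual -= learning_rate * residual  # each tree removes a fraction of what's left
        rounds += 1
    return rounds


for lr in (0.3, 0.1, 0.01):
    print(lr, rounds_to_shrink(lr))
```

Cutting the learning rate from 0.1 to 0.01 raises the count from 44 to 459 rounds, roughly ten times as many. Real trees never fit the residuals perfectly, so actual round counts differ, but this scaling is why a 0.01 learning rate goes hand in hand with 1000+ rounds.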

max_depth

- Why It’s Important: Limits tree depth to avoid overfitting.

- Interview Tip: LightGBM's default is -1 (no limit). When you do set it, values between 3 and 10 are typical, depending on the dataset.

min_data_in_leaf

- Why It’s Important: Sets the minimum number of samples per leaf. Higher values reduce overfitting.

- Interview Tip: Especially important for large datasets, where values in the hundreds or thousands are common. Set it too high, though, and the model underfits.

feature_fraction

- Why It’s Important: Randomly selects a fraction of features for each tree, reducing overfitting.

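- Interview Tip: Commonly set between 0.7 and 0.9. It's similar in spirit to random forest feature sampling, except that feature_fraction picks the subset once per tree rather than at every split.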
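
Here's a minimal sketch of per-tree feature subsampling, assuming the simple rule "keep int(n_features × feature_fraction) features, and at least one". LightGBM's exact rounding and random number generation may differ, and sample_features is a hypothetical name.

```python
import random


def sample_features(feature_names, feature_fraction, rng):
    """Pick the subset of features one tree is allowed to use."""
    k = max(1, int(len(feature_names) * feature_fraction))
    return sorted(rng.sample(feature_names, k))


rng = random.Random(42)  # fixed seed so the run is reproducible
features = [f"f{i}" for i in range(10)]
for tree_index in range(3):
    # A fresh subset for every tree: each tree sees a slightly different view.
    print(tree_index, sample_features(features, 0.8, rng))
```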

objective

- Why It’s Important: Defines the loss function based on your task (classification or regression).

- Interview Tip: Set it to binary for binary classification, multiclass for multi-class (together with num_class), or regression for predicting numbers.
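
A small helper that maps a task to the matching objective can prevent a classic mistake: forgetting num_class for multiclass problems. The objective and metric strings (binary, multiclass, regression, binary_logloss, multi_logloss, l2) are LightGBM's own; the helper objective_params is hypothetical, and rejecting a two-class multiclass setup is a design choice of this sketch.

```python
def objective_params(task, num_classes=None):
    """Map a task type to a LightGBM objective plus a matching evaluation metric."""
    if task == "binary":
        return {"objective": "binary", "metric": "binary_logloss"}
    if task == "multiclass":
        # LightGBM's multiclass objective needs to know how many classes there are.
        if num_classes is None or num_classes < 3:
            raise ValueError("use 'binary' for two classes; pass num_classes >= 3")
        return {"objective": "multiclass", "num_class": num_classes,
                "metric": "multi_logloss"}
    if task == "regression":
        return {"objective": "regression", "metric": "l2"}
    raise ValueError(f"unknown task: {task!r}")


print(objective_params("multiclass", num_classes=4))
```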

early_stopping_rounds

- Why It’s Important: Stops training if the model’s performance on the validation set stops improving.

- Interview Tip: Typically set to 50–100 rounds to save time and prevent overfitting.
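
Here's the logic behind early stopping as a minimal plain-Python sketch with a hypothetical function name: track the best validation loss seen so far and stop once it hasn't improved for stopping_rounds consecutive rounds.

```python
def early_stopping_iteration(valid_losses, stopping_rounds):
    """Return (best_round, stopped_round), both 1-based.

    Training stops once the validation loss has gone `stopping_rounds`
    rounds without beating its best value so far.
    """
    best_loss, best_round = float("inf"), 0
    for round_number, loss in enumerate(valid_losses, start=1):
        if loss < best_loss:
            best_loss, best_round = loss, round_number
        elif round_number - best_round >= stopping_rounds:
            return best_round, round_number  # patience ran out: stop here
    return best_round, len(valid_losses)  # never triggered: used every round


losses = [0.70, 0.60, 0.55, 0.56, 0.57, 0.58, 0.59]
print(early_stopping_iteration(losses, stopping_rounds=3))
```

With this loss curve it keeps round 3 and stops at round 6. In LightGBM 4.x you get the same behavior by passing `callbacks=[lgb.early_stopping(stopping_rounds=100)]` to `lgb.train` along with valid_sets, and the trained booster's best_iteration attribute records the best round.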

Speaking of improvements, let's tackle some common questions that might be bouncing around in your head...

FAQ

How does leaf-wise growth differ from level-wise growth?

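Level-wise growth splits every leaf at the current depth before moving deeper, which creates balanced trees but spends splits on leaves that barely reduce the error.

In contrast, leaf-wise growth always splits the leaf with the largest error reduction. With the same number of leaves it usually reaches a lower error, but the resulting trees are deeper and unbalanced, so they can overfit small datasets (which is why max_depth and num_leaves matter).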
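
To see the difference in action, here's a toy simulation in plain Python. It assumes each candidate split's error reduction (gain) is known in advance and hard-codes it in a hypothetical CANDIDATES table; real implementations compute gains from gradient statistics. Both strategies get the same budget of leaves, and the level-wise version stops mid-level once the budget runs out, which is a simplification.

```python
import heapq

# Hypothetical candidate splits: node -> (gain if split, left child, right child).
CANDIDATES = {
    "root": (10.0, "A", "B"),
    "A": (8.0, "A1", "A2"),
    "B": (0.5, "B1", "B2"),
    "A1": (6.0, "A1a", "A1b"),
    "A2": (0.4, "A2a", "A2b"),
    "B1": (0.3, "B1a", "B1b"),
    "B2": (0.2, "B2a", "B2b"),
}


def grow_leaf_wise(max_leaves):
    """Always split the leaf with the largest gain, wherever it is in the tree."""
    leaves, total_gain = 1, 0.0
    frontier = [(-CANDIDATES["root"][0], "root")]  # max-heap via negated gains
    while frontier and leaves < max_leaves:
        neg_gain, node = heapq.heappop(frontier)
        total_gain += -neg_gain
        leaves += 1  # one leaf becomes two
        for child in CANDIDATES[node][1:]:
            if child in CANDIDATES:
                heapq.heappush(frontier, (-CANDIDATES[child][0], child))
    return total_gain


def grow_level_wise(max_leaves):
    """Split every node on the current level before moving to the next one."""
    leaves, total_gain = 1, 0.0
    level = ["root"]
    while level and leaves < max_leaves:
        next_level = []
        for node in level:
            if leaves >= max_leaves:
                break
            if node not in CANDIDATES:
                continue  # nothing left to split here
            gain, left, right = CANDIDATES[node]
            total_gain += gain
            leaves += 1
            next_level += [left, right]
        level = next_level
    return total_gain


print("leaf-wise: ", grow_leaf_wise(4))
print("level-wise:", grow_level_wise(4))
```

With 4 leaves, leaf-wise growth spends its splits on root, A, and A1 for a total gain of 24.0, while level-wise growth has to split the weak node B and ends at 18.5.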

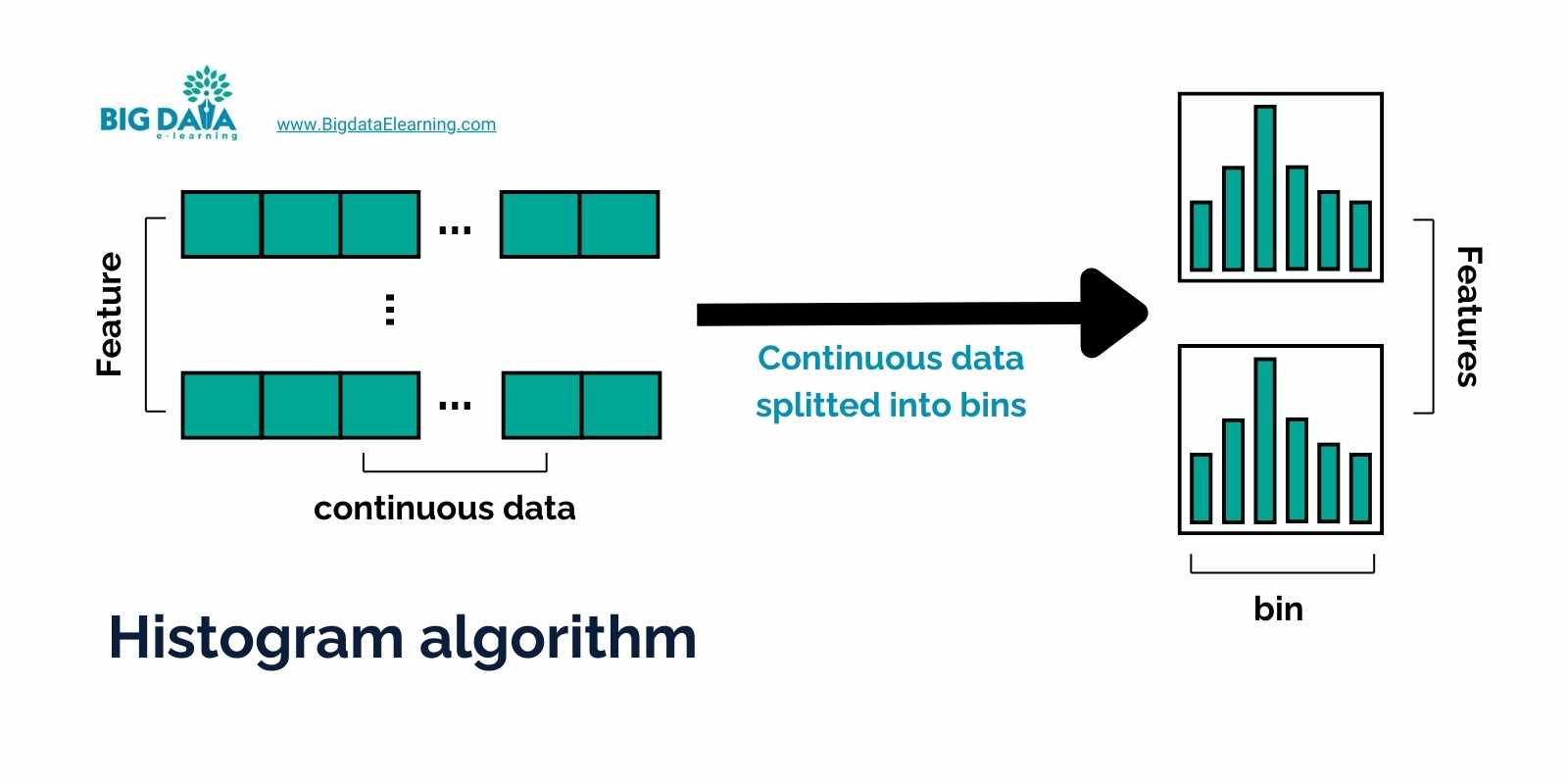

What are histogram-based algorithms?

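In LightGBM, histogram-based algorithms group continuous feature values into discrete bins (like building a histogram). Instead of testing every distinct value as a split threshold, LightGBM only tests the bin boundaries (at most 255 bins per feature by default, controlled by max_bin), which cuts the work needed to find splits while keeping accuracy close to exact methods.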
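
Here's a minimal sketch of histogram-based split finding for a single feature. It assumes squared error (so every hessian is 1), equal-width bins (LightGBM actually chooses bin boundaries from the data's distribution), and a hypothetical function name; the min_data_in_leaf argument mimics the hyperparameter of the same name.

```python
def histogram_best_split(xs, gradients, n_bins=4, min_data_in_leaf=1):
    """Bin one feature, accumulate gradient sums per bin, then scan bin edges.

    Squared-error setting, so every hessian is 1 and the split gain is
    G_left**2 / n_left + G_right**2 / n_right - G_total**2 / n_total
    (up to a constant factor). Returns (best_gain, threshold).
    """
    lo, hi = min(xs), max(xs)
    if hi == lo:
        return float("-inf"), None  # constant feature: nothing to split
    width = (hi - lo) / n_bins  # equal-width bins (a simplification)
    grad_sum, count = [0.0] * n_bins, [0] * n_bins
    for x, g in zip(xs, gradients):  # a single pass over the raw data
        b = min(int((x - lo) / width), n_bins - 1)
        grad_sum[b] += g
        count[b] += 1
    total_g, total_n = sum(grad_sum), len(xs)
    best_gain, best_threshold = float("-inf"), None
    left_g, left_n = 0.0, 0
    for b in range(n_bins - 1):  # only n_bins - 1 candidate thresholds
        left_g += grad_sum[b]
        left_n += count[b]
        right_g, right_n = total_g - left_g, total_n - left_n
        if left_n < min_data_in_leaf or right_n < min_data_in_leaf:
            continue  # mirrors LightGBM's min_data_in_leaf constraint
        gain = left_g ** 2 / left_n + right_g ** 2 / right_n - total_g ** 2 / total_n
        if gain > best_gain:
            best_gain, best_threshold = gain, lo + (b + 1) * width
    return best_gain, best_threshold


xs = [0.1, 0.4, 0.35, 0.8, 1.9, 2.2, 3.5, 3.9]
gradients = [-1.0, -1.2, -0.9, -1.1, 1.0, 1.1, 0.9, 1.2]
print(histogram_best_split(xs, gradients))
print(histogram_best_split(xs, gradients, min_data_in_leaf=5))
```

The data is scanned once to fill the bins, and then only n_bins - 1 thresholds are evaluated no matter how many rows there are. On this toy data the best threshold falls at about 1.05, and raising min_data_in_leaf to 5 rules out every candidate, so no split is returned.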

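Fun fact: because a bin index fits in a single byte when there are at most 256 bins, this technique can shrink the memory needed for the training data dramatically compared with storing raw floating-point values, which is a big reason LightGBM excels with large datasets.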
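
The back-of-the-envelope arithmetic looks like this for a hypothetical dataset of 10 million rows and 100 features. It only counts the feature matrix itself (LightGBM also stores gradients, histograms, and other bookkeeping), so treat it as an illustration of the effect rather than a benchmark.

```python
def matrix_bytes(n_rows, n_features, bytes_per_value):
    """Memory needed to store a dense feature matrix."""
    return n_rows * n_features * bytes_per_value


rows, features = 10_000_000, 100  # a hypothetical "large" dataset
float64_bytes = matrix_bytes(rows, features, 8)  # raw double-precision values
float32_bytes = matrix_bytes(rows, features, 4)  # raw single-precision values
binned_bytes = matrix_bytes(rows, features, 1)   # one-byte bin indices (<= 256 bins)

print(f"float64: {float64_bytes / 1e9:.1f} GB")
print(f"float32: {float32_bytes / 1e9:.1f} GB")
print(f"binned:  {binned_bytes / 1e9:.1f} GB")
print(f"saving vs float64: {1 - binned_bytes / float64_bytes:.1%}")
print(f"saving vs float32: {1 - binned_bytes / float32_bytes:.1%}")
```

That works out to 8 GB versus 1 GB (an 87.5% saving) against double precision, and 4 GB versus 1 GB (75%) against single precision.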

Understanding these two ideas will make hyperparameter tuning feel much more intuitive.

Next, let's clear up a question that comes up all the time: how does LightGBM compare with XGBoost?

LightGBM vs. XGBoost

Let me paint you a picture.

Think of XGBoost as a reliable all-terrain SUV - it's been the go-to choice for years and handles most situations well.

LightGBM? It's more like a sleek sports car - optimized for speed, especially with large datasets.

LightGBM’s Edge: The Sports Car

- Speed: LightGBM uses histogram-based algorithms to speed up split finding and reduce memory usage. In some cases it's around 7x faster than XGBoost, though the gap depends on your data and settings.

- Leaf-Wise Growth: It grows trees leaf by leaf, focusing on the best branches, which often improves accuracy but can overfit, especially on small datasets.

- Big Data: LightGBM is ideal for massive datasets with thousands of features, where XGBoost may slow down.

XGBoost’s Strengths: The All-Terrain SUV

- Robustness: XGBoost works well across many types of datasets, making it a solid all-around choice.

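- Regularization: XGBoost applies L2 regularization by default (its lambda parameter defaults to 1), while LightGBM's lambda_l1 and lambda_l2 default to 0. That built-in safety net against overfitting can make XGBoost more forgiving for beginners.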

If you need speed with big data, LightGBM is your pick. For versatility and reliability, XGBoost has the edge.

When Should You Choose Which?

Choose LightGBM when you:

- Have huge datasets (millions of rows, thousands of features).

- Need speed and memory efficiency.

Choose XGBoost when you:

- Work with datasets of varying sizes.

- Want regularization that's switched on by default, so there's less to tune.
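
If you choose LightGBM but want some of XGBoost's out-of-the-box regularization, you can switch it on yourself. The sketch below shows hypothetical configs side by side; the parameter names are the libraries' own (eta and lambda in XGBoost, lambda_l2 and min_gain_to_split in LightGBM), while the values are illustrative.

```python
# Hypothetical configs showing where L2 regularization lives in each library.
xgboost_params = {
    "objective": "binary:logistic",
    "eta": 0.05,       # XGBoost's learning rate
    "max_depth": 6,    # XGBoost's default depth limit
    "lambda": 1.0,     # L2 penalty on leaf weights; 1 is XGBoost's default
}

lightgbm_params = {
    "objective": "binary",
    "learning_rate": 0.05,
    "num_leaves": 31,
    "lambda_l2": 1.0,          # LightGBM's default is 0, so you opt in explicitly
    "min_gain_to_split": 0.0,  # raise above 0 to skip splits with tiny gains
}
```

With both packages installed, these dicts would be passed to `xgboost.train(params, dtrain)` and `lightgbm.train(params, train_set)` respectively, where dtrain and train_set are built from your own data.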

Conclusion: A Quick Recap

Wow, what a journey we've been on together!

Let me break down everything you've learned:

- First, we explored what makes LightGBM special: a cutting-edge gradient boosting framework that grows trees leaf by leaf, focusing on the most impactful areas. This strategy sets it apart and often makes it faster and more accurate than traditional level-wise methods.

- Then, we saw the advantages of LightGBM, including its blazing speed, high accuracy, and ability to handle massive datasets with ease.

- Next, we dived into when to use LightGBM, discovering its sweet spots like massive datasets, the need for speed, and situations demanding both precision and efficiency.

- After that, we unraveled the art of LightGBM hyperparameter tuning and answered common questions, equipping you with the tools to fine-tune your models for peak performance.

- Finally, we compared it with XGBoost, highlighting why LightGBM is the go-to choice for big data scenarios while XGBoost remains a versatile contender.

- In summary, LightGBM is a must-have tool for any data scientist seeking speed, accuracy, and efficiency in their machine learning projects.

I can't wait for you to put all this knowledge into practice!

Remember, every data scientist started exactly where you are now.

The difference is, you've got a solid understanding of one of the most powerful tools in the field.
